
Creating an LXC Containerized Selenium Grid

Note: This is an old article which contains content that is out of date.

Note: This article suggests using Oracle’s Java JRE. Because of Oracle’s licensing terms for the Java JRE, I now suggest using OpenJDK instead. OpenJDK has improved by leaps and bounds since this article was originally written, and I’m optimistic it will work.

Background

This guide shows you how to build one or more self-contained Selenium grids using:

- Ubuntu Server 14.04.3 LTS

- Selenium Server 2.48.2

- LXC containers for each grid node - this means we can run more tests in parallel without running into Selenium/Firefox mutex problems

- vnc4server and Openbox to provide a desktop - sometimes developers like to see the tests running when diagnosing issues

- Firefox 38.4.0 ESR

- Firebug to generate HAR (HTTP Archive) files - see http://www.softwareishard.com/har/viewer/

- Optionally, GlusterFS to synchronise HAR files across multiple Selenium Grid hosts - synchronising these files makes life easier for developers/devops when trying to diagnose problems

- Samba to make the Selenium logs and HAR files accessible from the network

The approach used here was inspired by Dimitri Baeli’s “Selenium in the Sky with LXC” article on comparethemarket.com’s tech blog. In this installation, the intent is to give developers fast feedback from a suite of Selenium browser/API tests incorporated into a Continuous Integration/Deployment process. We are therefore provisioning Selenium grids for use on a private network. If you intend to host a similar solution on cloud-based infrastructure, you will need to take the necessary steps to secure communications and harden the operating systems.

I have opted to run multiple self-contained Selenium Grids. Each grid runs on one physical server, with the host operating system running a Selenium hub. Each server then runs multiple Selenium node instances, each in its own LXC container.

Throughout this guide, “sghost0” refers to the Selenium grid host server we’re building. You may be building multiple Selenium grid host servers, in which case they are referred to as “sghost1”, “sghost2”, etc.

This guide assumes you have a cleanly installed Ubuntu Server 14.04.3 host with OpenSSH installed. You will need root privileges either directly or via sudo. It’s also assumed that you have a working DNS integrated DHCP service.

Set up the LXC / Selenium hub host (sghost0)

Set Up Bridged Networking

Install bridge-utils

$ sudo apt-get install -y bridge-utils

Edit the /etc/network/interfaces configuration file. We will use a dynamic IP for now.

$ sudo vi /etc/network/interfaces

Comment out the current primary network interface; change

auto eth0

iface eth0 inet dhcp

to

#auto eth0

#iface eth0 inet dhcp

And then add:

auto br0

iface br0 inet dhcp

bridge_ports eth0

bridge_fd 0

bridge_maxwait 0

Implement the change

$ sudo ifdown eth0 ; sudo ifup br0
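Once br0 is up, you can check that eth0 really is attached to the bridge. The kernel lists every bridge port under /sys/class/net/<bridge>/brif/, so a minimal sketch might look like this (the function name is hypothetical, and the optional sysfs-root argument exists only so the function can be tried against a fake directory tree):

```shell
#!/bin/bash
# Hypothetical helper: succeed if INTERFACE is a port of BRIDGE.
# Relies on the kernel's sysfs layout: a bridge device has a "bridge/"
# directory, and each of its ports appears as /sys/class/net/<bridge>/brif/<port>.
bridge_has_port() {
    local bridge="$1" iface="$2" sysfs_root="${3:-/sys}"
    # Not a bridge at all? Then it cannot have ports.
    [ -d "${sysfs_root}/class/net/${bridge}/bridge" ] || return 1
    [ -e "${sysfs_root}/class/net/${bridge}/brif/${iface}" ]
}
```

For example, `bridge_has_port br0 eth0 && ip -4 addr show br0` should confirm the attachment and show the DHCP-assigned address, which is now held by br0 rather than eth0.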

Install and configure GlusterFS

This is an optional step: if you are setting up a single Selenium grid host, you can skip this section. If you are planning to run two, three or four Selenium grids, then using a GlusterFS volume to synchronise the Selenium log and HTTP Archive files can be beneficial. This configuration has not been tested with more than four Selenium grids; I suspect that the increased disk and network I/O could start to become a concern with more grids.

You might be asking: why not run one “grid” comprising a hub on one host and nodes in LXC containers on all three or four hosts? I’ve found that a “distributed grid” results in tests that take much longer to run, because they are delayed by the cross-network communications between the hub and node Selenium instances. It’s better to ensure that all communication between the hub and its nodes stays on the host. The Linux kernel should ensure that network traffic between the host and the bridged LXC containers does not go out onto the physical network. Our development team have produced a wrapper for the Selenium WebDriver used to run the tests, which means they can be run against multiple grids in parallel.

Install:

$ sudo apt-get install glusterfs-common glusterfs-server glusterfs-client attr

Choose one Selenium grid host to be your primary GlusterFS node (e.g. “sghost0”). On that host run the following once for each other host:

$ sudo gluster peer probe <hostname>.yourdomain.yourtld

For example:

$ sudo gluster peer probe sghost1.yourdomain.yourtld

$ sudo gluster peer probe sghost2.yourdomain.yourtld

On each host, create the folders needed for GlusterFS:

$ sudo mkdir /media/gluster-sel-har

$ sudo mkdir -p /var/log/selenium/har

$ sudo chmod -R 777 /media/gluster-sel-har

$ sudo chmod -R 777 /var/log/selenium

Back on the primary GlusterFS node, “sghost0”, create the replicated GlusterFS volume and set the necessary properties. Replace “replica 3” with the actual number of Selenium grid hosts you are planning to use, and list exactly one brick per host.

$ sudo gluster volume create SelHarVol replica 3 transport tcp sghost0.yourdomain.yourtld:/media/gluster-sel-har sghost1.yourdomain.yourtld:/media/gluster-sel-har sghost2.yourdomain.yourtld:/media/gluster-sel-har force

$ sudo gluster volume set SelHarVol server.allow-insecure on

$ sudo gluster volume set SelHarVol auth.allow "*"

$ sudo gluster volume start SelHarVol
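Because the number of bricks must be a multiple of the replica count (here, one brick per host and a replica count equal to the number of hosts), it can help to generate the create command from a single list of hostnames rather than typing each brick by hand. A sketch, assuming the volume name and brick path used above; the function is hypothetical and only prints the command, so you can review it before running it:

```shell
#!/bin/bash
# Hypothetical helper: print a `gluster volume create` command with one brick
# per host and a replica count equal to the number of hosts.
# "force" is kept because the bricks sit on the root filesystem, which
# gluster otherwise refuses to use.
# Usage: gluster_create_cmd VOLUME DOMAIN BRICK_PATH HOST...
gluster_create_cmd() {
    local volume="$1" domain="$2" brick_path="$3"
    shift 3
    if [ "$#" -lt 2 ]; then
        echo "need at least two hosts for a replicated volume" >&2
        return 1
    fi
    local bricks=() host
    for host in "$@"; do
        bricks+=("${host}.${domain}:${brick_path}")
    done
    echo "gluster volume create ${volume} replica $# transport tcp ${bricks[*]} force"
}
```

For example, `gluster_create_cmd SelHarVol yourdomain.yourtld /media/gluster-sel-har sghost0 sghost1 sghost2` prints the three-brick command; check it, then run it with sudo on sghost0.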

Add the following line to /etc/fstab on each host:

localhost:/SelHarVol /var/log/selenium/har glusterfs defaults,nobootwait,_netdev,fetch-attempts=10 0 0

Then mount it:

$ sudo mount -a
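Because of nobootwait, the boot carries on even if this mount fails, so it is worth checking that /var/log/selenium/har really is backed by the volume. A minimal sketch that looks for the mount point in /proc/mounts; the function name is hypothetical, and the second argument exists only so it can be tested against a sample file:

```shell
#!/bin/bash
# Hypothetical helper: succeed if MOUNTPOINT is mounted from a GlusterFS volume.
# The GlusterFS FUSE client normally reports its type as "fuse.glusterfs";
# plain "glusterfs" is accepted as well, to be safe.
is_gluster_mounted() {
    local mountpoint="$1" mounts_file="${2:-/proc/mounts}"
    awk -v mp="$mountpoint" '
        $2 == mp && ($3 == "fuse.glusterfs" || $3 == "glusterfs") { found = 1 }
        END { exit !found }
    ' "$mounts_file"
}
```

For example, `is_gluster_mounted /var/log/selenium/har || echo "HAR volume not mounted"`.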

Verify that the volume is running, with a brick on each host:

$ sudo gluster volume info all

Volume Name: SelHarVol

Type: Replicate

Volume ID: 3553fcf7-cf6f-49ee-8c15-e7e02a9309b7

Status: Started

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: sghost0.yourdomain.yourtld:/media/gluster-sel-har

Brick2: sghost1.yourdomain.yourtld:/media/gluster-sel-har

Brick3: sghost2.yourdomain.yourtld:/media/gluster-sel-har

Options Reconfigured:

server.allow-insecure: on

auth.allow: *

Install and configure Samba

Install Samba

$ sudo apt-get install -y samba

Edit the configuration

$ sudo vi /etc/samba/smb.conf

Replace the contents of the file with the following:

[global]
# Your NetBIOS domain name, or WORKGROUP if you don't have a domain
workgroup = YOURDOMAIN
server string = %h server
wins support = no
dns proxy = no
log file = /var/log/samba/log.%m
max log size = 1000
panic action = /usr/share/samba/panic-action %d
server role = standalone server
encrypt passwords = true
passdb backend = tdbsam
unix password sync = no
map to guest = bad user

[selenium]
path = /var/log/selenium
browseable = yes
writeable = no
guest ok = yes

[firebug]
path = /var/log/selenium/har
browseable = yes
writeable = yes
guest ok = yes

Restart samba

$ sudo service smbd restart

$ sudo service nmbd restart
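Samba’s own `testparm -s` is the first thing to run after editing smb.conf, as it parses the file and reports problems it recognises. It may not flag a trailing comment, though: smb.conf only ignores lines that *begin* with “#” or “;”, so text after a value on the same line becomes part of that value. A small hypothetical checker for that particular mistake (a heuristic, not a Samba tool):

```shell
#!/bin/bash
# Hypothetical lint helper: print "line-number: text" for smb.conf parameter
# lines that appear to carry a trailing "#" or ";" comment, and return
# non-zero if any were found.
find_trailing_comments() {
    local conf="${1:-/etc/samba/smb.conf}"
    awk '
        /^[[:space:]]*[#;]/ { next }   # whole-line comment: allowed
        /=/ && /[[:space:]][#;]/ { printf "%d: %s\n", NR, $0; bad = 1 }
        END { exit bad }
    ' "$conf"
}
```

For example, `find_trailing_comments && sudo testparm -s` runs the heuristic check first and only then Samba’s own parser.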

Install Java

Install Oracle (formerly Sun) Java. In my experience, Selenium does not play too well with OpenJDK.

$ sudo apt-get install software-properties-common # Only required for Ubuntu Minimal installations

$ sudo apt-add-repository ppa:webupd8team/java

$ sudo apt-get update

$ sudo apt-get install -y oracle-java7-installer

In the last step you will need to confirm you are happy to accept the end user license agreement.

Install and configure Selenium server on the host

If you skipped installing and configuring GlusterFS, then create a folder for the selenium log files and make it world writeable.

$ sudo mkdir -p /var/log/selenium/har

$ sudo chmod -R 777 /var/log/selenium

Create a folder for the selenium-server jar file

$ sudo mkdir /opt/selenium-server

$ cd /opt/selenium-server

Download Selenium server

$ sudo wget http://selenium-release.storage.googleapis.com/2.48/selenium-server-standalone-2.48.2.jar

Create a shell script to start a Selenium node instance within the LXC containers

$ sudo vi start-selenium.sh

Add the following lines

#!/bin/bash

portNumber=$((5555 + $(echo -n $(hostname | grep -o -P '\d+$'))))

logFile=/var/log/selenium/node_$(hostname)_$(date +%Y%m%d).log

echo "$(date +%Y%m%d)T$(date +%H%M%S) : [selenium-server] Launching selenium-server-standalone on $(hostname)" >> $logFile

java -jar /opt/selenium-server/selenium-server-standalone-2.48.2.jar -role node \
    -hub http://$(hostname | grep -P -o '^\w*').yourdomain.yourtld:4444/grid/register -port $portNumber \
    -maxSession 4 -registerCycle 5000 -timeout 150000 -browserTimeout 120000 \
    -browser browserName=firefox,version=38.4.0,maxInstances=4,platform=LINUX &>> $logFile

Save and exit. Then make the script executable.

$ sudo chmod 755 start-selenium.sh
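start-selenium.sh derives two values from the container’s hostname: the node port (5555 plus the trailing number, so sghost0-1 listens on 5556) and the hub’s hostname (the leading run of word characters, so sghost0-1 registers with sghost0). The same logic, rewritten with bash pattern matching instead of `grep -P` so it can be checked without a container, might look like this; both function names are hypothetical:

```shell
#!/bin/bash
# Hypothetical re-implementation of the hostname parsing in start-selenium.sh.

# Node port: 5555 plus the digits at the end of the hostname.
node_port_for_host() {
    local host="$1"
    if [[ $host =~ ([0-9]+)$ ]]; then
        # 10# forces base 10, so a suffix such as "08" is not read as octal.
        echo $((5555 + 10#${BASH_REMATCH[1]}))
    else
        echo "hostname '$host' has no trailing number" >&2
        return 1
    fi
}

# Hub host: the leading run of word characters (letters, digits, underscore),
# i.e. everything before the first "-" in names such as sghost0-1.
hub_host_for_node() {
    local host="$1"
    [[ $host =~ ^([A-Za-z0-9_]+) ]] || return 1
    echo "${BASH_REMATCH[1]}"
}
```

Note that the template container itself (sel-node-template) has no trailing number, so the original script would fail to compute a port there; it is only meant to run in the numbered clones.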

Later on we will mount the /opt/selenium-server and /var/log/selenium folders within the LXC containers. This affords several benefits:

- We can upgrade Selenium without touching the containers

- We have just one location in which to check log files when diagnosing problems with the Selenium grid

- If we are using multiple hosts/grids, we can use GlusterFS to synchronise the log folder and Samba to make the share accessible to developers

Create a selenium user, specifying the group and user id

$ sudo adduser selenium -uid 1234 -gid 1234 # you will be prompted for a password and user details

Adding user `selenium' ...

Adding new group `selenium' (1234) ...

Adding new user `selenium' (1234) with group `selenium' ...

Creating home directory `/home/selenium' ...

Copying files from `/etc/skel' ...

Enter new UNIX password:

Retype new UNIX password:

Sorry, passwords do not match

passwd: Authentication token manipulation error

passwd: password unchanged

Try again? [y/N] y

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

Changing the user information for selenium

Enter the new value, or press ENTER for the default

Full Name []:

Room Number []:

Work Phone []:

Home Phone []:

Other []:

Is the information correct? [Y/n] y

Create a SystemV init script to start the Selenium hub. Note the “GID” and “UID” variables set to ‘1234’.

$ sudo vi /etc/init.d/selenium-hub

Insert the following text:

#! /bin/sh

### BEGIN INIT INFO

# Provides: selenium-hub

# Required-Start: $network $syslog

# Required-Stop: $syslog

# Default-Start: 2 3 4 5

# Default-Stop: 0 1 6

# Short-Description: Starts Selenium Server hub instance

# Description: Run's a hub instance of Selenium Server in a

# Selenium Grid

### END INIT INFO

# Author: .BN <biscuitninja@someDomain.someTld>

PATH=/sbin:/usr/sbin:/bin:/usr/bin

DESC="Selenium Server - Hub"

NAME=selenium-hub

PIDFILE=/var/run/$NAME.pid

SCRIPTNAME=/etc/init.d/$NAME

JARFILE=/opt/selenium-server/selenium-server-standalone-2.48.2.jar

LOGFILE=/var/log/selenium/hub_$(hostname)_$(date +%Y%m%d).log

SELENIUM_ARGS="-role hub -log $LOGFILE -nodePolling 5000 -nodeStatusCheckTimeout 5000 -downPollingLimit 3 -unregisterIfStillDownAfter 30000 -browserTimeout 120000"

DAEMON=/usr/bin/java

DAEMON_ARGS="-jar $JARFILE $SELENIUM_ARGS"

# Use the selenium UID/GID

UID=1234

GID=1234

# Exit if the package is not installed

[ -s "$JARFILE" ] || { echo "${JARFILE} not found"; exit 0; }

[ -x "$DAEMON" ] || { echo "${DAEMON} not installed"; exit 0; }

# Read configuration variable file if it is present

[ -r /etc/default/$NAME ] && . /etc/default/$NAME

# Load the VERBOSE setting and other rcS variables

. /lib/init/vars.sh

# Define LSB log_* functions.

# Depend on lsb-base (>= 3.2-14) to ensure that this file is present

# and status_of_proc is working.

. /lib/lsb/init-functions

#

# Function that starts the daemon/service

#

do_start()

{

# Return

# 0 if daemon has been started

# 1 if daemon was already running

# 2 if daemon could not be started

start-stop-daemon --start --quiet --pidfile $PIDFILE --exec $DAEMON --test > /dev/null \
    || return 1

start-stop-daemon --start --quiet --pidfile $PIDFILE --make-pidfile --chuid $UID:$GID --background --exec $DAEMON -- \
    $DAEMON_ARGS \
    || return 2

# Add code here, if necessary, that waits for the process to be ready

# to handle requests from services started subsequently which depend

# on this one. As a last resort, sleep for some time.

}

#

# Function that stops the daemon/service

#

do_stop()

{

# Return

# 0 if daemon has been stopped

# 1 if daemon was already stopped

# 2 if daemon could not be stopped

# other if a failure occurred

start-stop-daemon --stop --quiet --retry=KILL/5 --pidfile $PIDFILE

RETVAL="$?"

[ "$RETVAL" = 2 ] && return 2

# Wait for children to finish too if this is a daemon that forks

# and if the daemon is only ever run from this initscript.

# If the above conditions are not satisfied then add some other code

# that waits for the process to drop all resources that could be

# needed by services started subsequently. A last resort is to

# sleep for some time.

start-stop-daemon --stop --quiet --oknodo --retry=KILL/5 --exec $DAEMON

[ "$?" = 2 ] && return 2

# Many daemons don't delete their pidfiles when they exit.

rm -f $PIDFILE

return "$RETVAL"

}

#

# Function that sends a SIGHUP to the daemon/service

#

do_reload() {

#

# If the daemon can reload its configuration without

# restarting (for example, when it is sent a SIGHUP),

# then implement that here.

#

start-stop-daemon --stop --signal 1 --quiet --pidfile $PIDFILE --name $NAME

return 0

}

case "$1" in

start)

[ "$VERBOSE" != no ] && log_daemon_msg "Starting $DESC" "$NAME"

do_start

case "$?" in

0|1) [ "$VERBOSE" != no ] && log_end_msg 0 ;;

2) [ "$VERBOSE" != no ] && log_end_msg 1 ;;

esac

;;

stop)

[ "$VERBOSE" != no ] && log_daemon_msg "Stopping $DESC" "$NAME"

do_stop

case "$?" in

0|1) [ "$VERBOSE" != no ] && log_end_msg 0 ;;

2) [ "$VERBOSE" != no ] && log_end_msg 1 ;;

esac

;;

status)

status_of_proc "$DAEMON" "$NAME" && exit 0 || exit $?

;;

#reload|force-reload)

#

# If do_reload() is not implemented then leave this commented out

# and leave 'force-reload' as an alias for 'restart'.

#

#log_daemon_msg "Reloading $DESC" "$NAME"

#do_reload

#log_end_msg $?

#;;

restart|force-reload)

#

# If the "reload" option is implemented then remove the

# 'force-reload' alias

#

log_daemon_msg "Restarting $DESC" "$NAME"

do_stop

case "$?" in

0|1)

do_start

case "$?" in

0) log_end_msg 0 ;;

1) log_end_msg 1 ;; # Old process is still running

*) log_end_msg 1 ;; # Failed to start

esac

;;

*)

# Failed to stop

log_end_msg 1

;;

esac

;;

*)

#echo "Usage: $SCRIPTNAME {start|stop|restart|reload|force-reload}" >&2

echo "Usage: $SCRIPTNAME {start|stop|status|restart|force-reload}" >&2

exit 3

;;

esac

:

Make the init script executable:

$ sudo chmod 755 /etc/init.d/selenium-hub

Add the new init script to the default run levels

$ sudo update-rc.d selenium-hub defaults

Start the service

$ sudo service selenium-hub start

Check the service is running

$ ps -ef | grep selenium

selenium 1457 1 0 17:07 ? 00:00:01 /usr/bin/java -jar /opt/selenium-server/selenium-server-standalone-2.48.2.jar -role hub -log /var/log/selenium/hub_sghost0_20151110.log -nodePolling 5000 -nodeStatusCheckTimeout 5000 -downPollingLimit 3 -unregisterIfStillDownAfter 30000 -browserTimeout 120000
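The hub may take a few seconds to start listening after the init script returns. A sketch of a wait loop, assuming the hub’s default port 4444 and using bash’s /dev/tcp redirection together with coreutils `timeout`; the function name is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: poll until something accepts TCP connections on
# HOST:PORT, giving up after roughly MAX_SECONDS attempts. Uses bash's
# /dev/tcp pseudo-device, so it must run under bash, not sh/dash.
wait_for_port() {
    local host="$1" port="$2" max_seconds="${3:-30}" waited=0
    while [ "$waited" -lt "$max_seconds" ]; do
        # Each connection attempt is capped at one second by `timeout`.
        if timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}
```

For example, `wait_for_port sghost0 4444 60 && echo "hub is listening"`.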

Install LXC on the Host

Install:

$ sudo apt-get install -y lxc

If you have configured apt not to install recommends, also install the recommended packages:

$ sudo apt-get install -y uidmap lxc-templates debootstrap

Create and Initialize a Container

Create a container named “sel-node-template”, running Ubuntu Trusty x64

$ sudo lxc-create -t ubuntu -n sel-node-template -- --release trusty --arch amd64

List containers

$ sudo lxc-ls

sel-node-template

Switch the container onto bridged networking (as opposed to NAT)

$ sudo vi /var/lib/lxc/sel-node-template/config

Change the line

lxc.network.link = (lxcnet0|lxcbr0)

To

lxc.network.link = br0

Whilst we are here, configure the host folders that we want to be directly accessible within the container:

lxc.mount.entry = /opt/selenium-server opt/selenium-server none defaults,bind,optional,create=dir 0 0

lxc.mount.entry = /var/log/selenium var/log/selenium none defaults,bind,optional,create=dir 0 0

Save changes and close the file.
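If you build more than one template, the same two changes can be scripted rather than made in vi. A sketch using GNU sed and grep, assuming the LXC 1.0 key names shown above; the function name is hypothetical. It rewrites the lxc.network.link value and appends each mount entry only if it is not already present, so running it twice is harmless:

```shell
#!/bin/bash
# Hypothetical helper: point a container at BRIDGE and add the bind mounts
# for the shared Selenium folders. CONFIG is e.g.
# /var/lib/lxc/sel-node-template/config
configure_sel_container() {
    local config="$1" bridge="${2:-br0}" entry
    [ -f "$config" ] || { echo "no such file: $config" >&2; return 1; }

    # Replace the value of any existing lxc.network.link line.
    sed -i -E "s|^(lxc\.network\.link[[:space:]]*=).*|\1 ${bridge}|" "$config"

    for entry in \
        "lxc.mount.entry = /opt/selenium-server opt/selenium-server none defaults,bind,optional,create=dir 0 0" \
        "lxc.mount.entry = /var/log/selenium var/log/selenium none defaults,bind,optional,create=dir 0 0"
    do
        # -x: whole-line match, -F: fixed string; append only if missing.
        grep -qxF -- "$entry" "$config" || echo "$entry" >> "$config"
    done
}
```

For example, `sudo bash -c "$(declare -f configure_sel_container); configure_sel_container /var/lib/lxc/sel-node-template/config br0"` runs it with root privileges against the template’s config.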

Start the container

$ sudo lxc-start -n sel-node-template -d

Connect to container

$ sudo lxc-attach -n sel-node-template

Set Up the LXC / Selenium Grid Node Container

Install X (Openbox)

Update

root@sel-node-template$ apt-get update ; apt-get upgrade -y

Apt tweaks

root@sel-node-template$ vi /etc/apt/apt.conf.d/99tweaks

Insert the following text into the file

APT
{
    Install-Recommends "false";
    Install-Suggests "false";
};

Save changes and close the file.

Install openbox, vnc4server, etc…

root@sel-node-template$ apt-get install -y openbox xinit xorg vnc4server tint2 haveged

Install Oracle (Sun) Java (not OpenJDK)

root@sel-node-template$ apt-get install software-properties-common

root@sel-node-template$ apt-add-repository ppa:webupd8team/java

root@sel-node-template$ apt-get update

root@sel-node-template$ apt-get install -y oracle-java7-installer

Configure Openbox

Make xinitrc executable

root@sel-node-template$ chmod 755 /etc/X11/xinit/xinitrc

Add a selenium user

root@sel-node-template$ adduser selenium --uid 1234 --gid 1234 # you will be prompted for a password and user details

root@sel-node-template$ su - selenium

Tweak the Openbox menu

selenium@sel-node-template$ mkdir -p ~/.config/openbox

selenium@sel-node-template$ cp /etc/xdg/openbox/menu.xml ~/.config/openbox/.

selenium@sel-node-template$ vi ~/.config/openbox/menu.xml

Insert the following lines after “Web Browser”

<item label="Selenium Server">

<action name="Execute"><execute>/opt/selenium-server/start-selenium.sh</execute></action>

</item>

Delete the following lines…

<item label="ObConf">

<action name="Execute"><execute>obconf</execute></action>

</item>

<item label="Restart">

<action name="Restart" />

</item>

<separator />

<item label="Exit">

<action name="Exit" />

</item>

…but not

<item label="Reconfigure">

<action name="Reconfigure" />

</item>

Save changes and close the file.

Configure Openbox start up applications

selenium@sel-node-template$ vi ~/.config/openbox/autostart

Add the following lines, save, close

# Run tint2 to provide a taskbar within the desktop environment

/usr/bin/tint2 &

# Start a Selenium grid instance

/opt/selenium-server/start-selenium.sh &

Make the autostart script executable

selenium@sel-node-template$ chmod 764 ~/.config/openbox/autostart

Create a desktop folder

selenium@sel-node-template$ mkdir ~/Desktop

Configure vnc4server

Run vnc4server and specify a password (e.g. “123456”). We’re not going to use any VNC security, as the container will only be accessible from a local network, but vnc4server still requires a password on first use. You can ignore the warning “xauth: file /home/selenium/.Xauthority does not exist”; it is informational only, as vnc4server creates the file automatically.

selenium@sel-node-template$ vnc4server

Kill vnc4server.

selenium@sel-node-template$ vnc4server -kill :1 #Kill off the instance of vnc4server we just started

selenium@sel-node-template$ vi ~/.vnc/xstartup

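Uncomment lines 4 and 5 (“unset SESSION_MANAGER” and “exec /etc/X11/xinit/xinitrc”) so that the VNC session starts the desktop via xinitrc. Save changes and quit.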
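The same edit can be made without an editor. A sed sketch, assuming the default xstartup generated by vnc4server contains the commented-out lines `# unset SESSION_MANAGER` and `# exec /etc/X11/xinit/xinitrc`; the function name is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: uncomment the two "normal desktop" lines in a
# vnc4server xstartup file so the VNC session runs /etc/X11/xinit/xinitrc.
# Other comment lines are left untouched.
enable_xinitrc_session() {
    local xstartup="${1:-$HOME/.vnc/xstartup}"
    sed -i \
        -e 's|^#[[:space:]]*\(unset SESSION_MANAGER\)|\1|' \
        -e 's|^#[[:space:]]*\(exec /etc/X11/xinit/xinitrc\)|\1|' \
        "$xstartup"
}
```

Run it as the selenium user, e.g. `enable_xinitrc_session ~/.vnc/xstartup`, then check the file with `head -5 ~/.vnc/xstartup`.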

Remove the log file that vnc4server has just generated.

selenium@sel-node-template$ rm .vnc/*\:1.log

Close the selenium user’s shell:

selenium@sel-node-template$ exit

Install Firefox ESR

Let’s first install the dependencies Firefox needs:

root@sel-node-template$ apt-get install libasound2 libasound2-data libgtk2.0-0 libgtk2.0-common

root@sel-node-template$ cd /opt

root@sel-node-template$ wget https://ftp.mozilla.org/pub/firefox/releases/38.4.0esr/linux-x86_64/en-GB/firefox-38.4.0esr.tar.bz2

root@sel-node-template$ tar -xjf firefox*.tar.bz2

root@sel-node-template$ rm firefox*.tar.bz2

root@sel-node-template$ mv firefox firefox38_4_0

root@sel-node-template$ ln -s /opt/firefox38_4_0/firefox /usr/bin/firefox

root@sel-node-template$ ln -s /usr/bin/firefox /usr/bin/x-www-browser
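The download URL follows a fixed layout on ftp.mozilla.org (release, platform, locale, file name), so moving to a later ESR is largely a matter of changing the version string. A sketch that rebuilds the URL and install directory used above; the function names and defaults are assumptions, and you should check that the release you want is still published under that path and file name:

```shell
#!/bin/bash
# Hypothetical helper: build a Firefox ESR download URL following the
# ftp.mozilla.org layout used in this guide:
#   .../releases/<version>esr/<platform>/<locale>/firefox-<version>esr.tar.bz2
firefox_esr_url() {
    local version="$1" locale="${2:-en-GB}" platform="${3:-linux-x86_64}"
    echo "https://ftp.mozilla.org/pub/firefox/releases/${version}esr/${platform}/${locale}/firefox-${version}esr.tar.bz2"
}

# Hypothetical helper: the versioned install directory, e.g. /opt/firefox38_4_0.
firefox_install_dir() {
    local version="$1"
    echo "/opt/firefox${version//./_}"
}
```

Keeping the version in the directory name means an older Firefox can stay in place while a newer one is tested; only the /usr/bin/firefox symlink needs to change.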

Add an init script to start vnc4server

Check the user id and group id of the selenium user. Both should be 1234, the same IDs as the selenium user on the host:

root@sel-node-template$ grep selenium /etc/passwd

selenium:x:1234:1234:,,,:/home/selenium:/bin/bash
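Rather than reading the passwd line by eye, you can pull out the two numeric fields directly. `id -u selenium` and `id -g selenium` do the same job inside the container; this hypothetical sketch parses a passwd-format file instead, so it can also be pointed at the container’s /etc/passwd from the host (for example /var/lib/lxc/sel-node-template/rootfs/etc/passwd, assuming the default directory-backed rootfs):

```shell
#!/bin/bash
# Hypothetical helper: print "UID:GID" for USER from a passwd-format file.
# Fields in /etc/passwd are name:password:UID:GID:GECOS:home:shell.
passwd_ids() {
    local user="$1" passwd_file="${2:-/etc/passwd}"
    awk -F: -v u="$user" '$1 == u { print $3 ":" $4; found = 1 } END { exit !found }' "$passwd_file"
}
```

Run it against both the host’s and the container’s passwd files; both should print 1234:1234. In these privileged containers a UID inside the container is the same number on the host, so matching IDs mean files written to the bind-mounted /var/log/selenium folder have the same owner on both sides.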

Create the SystemV init script:

root@sel-node-template$ vi /etc/init.d/vnc4server

Paste the following, noting the UID and GID that match the selenium user.

#! /bin/sh

### BEGIN INIT INFO

# Provides: vnc4server

# Required-Start: $remote_fs $network haveged

# Required-Stop: $remote_fs $syslog

# Default-Start: 2 3 4 5

# Default-Stop: 0 1 6

# Short-Description: Starts selenium-grid node within XVNC instance

# Description: Starts an vnc4server VNC-XWindow instance, which

# in turn starts a selenium-grid node

### END INIT INFO

# Author: .BN <biscuitninja@someDomain.someTld>

DISPLAY="1"

PATH=/sbin:/usr/sbin:/bin:/usr/bin

DESC="selenium-grid node/XVNC instance"

NAME="vnc4server-${DISPLAY}"

DAEMON=/usr/bin/vnc4server

DAEMON_ARGS="-geometry 1600x900 -SecurityTypes None :${DISPLAY}"

DAEMON_KILL_ARGS="-kill :${DISPLAY}"

PIDFILE=/var/run/$NAME.pid

SCRIPTNAME=/etc/init.d/vnc4server

# Use the selenium UID/GID

UID=1234

GID=1234

# Set value for home directory used by vnc4server

export HOME=/home/selenium

# Exit if the package is not installed

[ -x "$DAEMON" ] || { echo "${DAEMON} not installed"; exit 0; }

# Read configuration variable file if it is present

[ -r /etc/default/$NAME ] && . /etc/default/$NAME

# Load the VERBOSE setting and other rcS variables

. /lib/init/vars.sh

# Define LSB log_* functions.

# Depend on lsb-base (>= 3.2-14) to ensure that this file is present

# and status_of_proc is working.

. /lib/lsb/init-functions

#

# Function that starts the daemon/service

#

do_start()

{

# Return

# 0 if daemon has been started

# 1 if daemon was already running

# 2 if daemon could not be started

start-stop-daemon --start --quiet --pidfile $PIDFILE --exec $DAEMON --test > /dev/null \
    || return 1

start-stop-daemon --start --quiet --pidfile $PIDFILE --chuid $UID:$GID --exec $DAEMON -- \
    $DAEMON_ARGS \
    || return 2

# Add code here, if necessary, that waits for the process to be ready

# to handle requests from services started subsequently which depend

# on this one. As a last resort, sleep for some time.

}

#

# Function that stops the daemon/service

#

do_stop()

{

$DAEMON $DAEMON_KILL_ARGS

RETVAL="$?"

[ "$RETVAL" != 0 ] && return 2

rm -f $PIDFILE

return "$RETVAL"

}

#

# Function that sends a SIGHUP to the daemon/service

#

do_reload() {

#

# If the daemon can reload its configuration without

# restarting (for example, when it is sent a SIGHUP),

# then implement that here.

#

start-stop-daemon --stop --signal 1 --quiet --pidfile $PIDFILE --name $NAME

return 0

}

case "$1" in

start)

[ "$VERBOSE" != no ] && log_daemon_msg "Starting $DESC" "$NAME"

do_start

case "$?" in

0|1) [ "$VERBOSE" != no ] && log_end_msg 0 ;;

2) [ "$VERBOSE" != no ] && log_end_msg 1 ;;

esac

;;

stop)

[ "$VERBOSE" != no ] && log_daemon_msg "Stopping $DESC" "$NAME"

do_stop

case "$?" in

0|1) [ "$VERBOSE" != no ] && log_end_msg 0 ;;

2) [ "$VERBOSE" != no ] && log_end_msg 1 ;;

esac

;;

status)

status_of_proc "$DAEMON" "$NAME" && exit 0 || exit $?

;;

#reload|force-reload)

#

# If do_reload() is not implemented then leave this commented out

# and leave 'force-reload' as an alias for 'restart'.

#

#log_daemon_msg "Reloading $DESC" "$NAME"

#do_reload

#log_end_msg $?

#;;

restart|force-reload)

#

# If the "reload" option is implemented then remove the

# 'force-reload' alias

#

log_daemon_msg "Restarting $DESC" "$NAME"

do_stop

case "$?" in

0|1)

do_start

case "$?" in

0) log_end_msg 0 ;;

1) log_end_msg 1 ;; # Old process is still running

*) log_end_msg 1 ;; # Failed to start

esac

;;

*)

# Failed to stop

log_end_msg 1

;;

esac

;;

*)

#echo "Usage: $SCRIPTNAME {start|stop|restart|reload|force-reload}" >&2

echo "Usage: $SCRIPTNAME {start|stop|status|restart|force-reload}" >&2

exit 3

;;

esac

:

Make the new init script executable

root@sel-node-template$ chmod 755 /etc/init.d/vnc4server

Add the new init script to the default run levels

root@sel-node-template$ update-rc.d vnc4server defaults

Free some space:

root@sel-node-template$ vi /var/lib/locales/supported.d/en

Delete all the locales except the one listed in /etc/default/locale. Save changes and close the file.

Exit and stop the container

root@sel-node-template$ exit

$ sudo lxc-stop -n sel-node-template

Or just shut it down:

root@sel-node-template$ shutdown -P now

Automate the Cloning and Start-up of the Containers

First, let’s create a new location for storing our initial template:

$ sudo mkdir /var/lib/lxc-base

Then let’s create a compressed archive in which to store the template container:

$ sudo -i

$ cd /var/lib/lxc

$ tar --numeric-owner -czvf /var/lib/lxc-base/sel-node-template.tar.gz sel-node-template

$ exit # sudo session
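Before relying on the archive, it is worth checking that it unpacks to a single sel-node-template directory, since the init script below extracts it straight into /var/lib/lxc. A sketch using `tar -tzf` to list the contents without extracting anything; the function name is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: succeed if every entry in a .tar.gz archive lives
# under the expected top-level directory ROOT (and the archive is not empty).
archive_has_single_root() {
    local archive="$1" root="$2"
    tar -tzf "$archive" | awk -v r="$root" '
        { n++; sub(/^\.\//, ""); split($0, parts, "/"); if (parts[1] != r) bad = 1 }
        END { exit (bad || n == 0) }
    '
}
```

For example, `sudo bash -c "$(declare -f archive_has_single_root); archive_has_single_root /var/lib/lxc-base/sel-node-template.tar.gz sel-node-template" && echo OK`.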

Create a SystemV init script to automatically start the Selenium grid containers on boot

$ sudo vi /etc/init.d/selenium-containers

Insert the following text into the file, then save and close it:

#! /bin/bash

### BEGIN INIT INFO

# Provides: selenium-containers

# Required-Start: $local_fs $syslog $network

# Required-Stop: $local_fs $syslog

# Default-Start: 2 3 4 5

# Default-Stop: 0 1 6

# Short-Description: Selenium Containers

# Description: Clones and starts LXC selenium grid node

# containers.

### END INIT INFO

# Author: .BN <biscuitninja@someDomain.someTld>

PATH=/sbin:/usr/sbin:/bin:/usr/bin

DESC="selenium-containers"

NAME="lxc-start"

DAEMON="/usr/bin/${NAME}"

DAEMON_ARGS="-d"

SCRIPTNAME=/etc/init.d/selenium-containers

# Exit if the package is not installed

/usr/bin/dpkg-query -W lxc &>/dev/null || exit 0

# Selenium/LXC specific variables

# Name of template container:

SEL_LXC_TEMPLATE_CONTAINER="sel-node-template"

# Path to template archive file:

SEL_LXC_TEMPLATE_ARCHIVE="/var/lib/lxc-base/sel-node-template.tar.gz"

# Number of clone containers to instantiate:

SEL_LXC_NUMBER_CLONES=2

# Exit if template archive not available

[ -f $SEL_LXC_TEMPLATE_ARCHIVE ] || exit 0

. /lib/lsb/init-functions

do_pre_start()

{

log_daemon_msg "Executing ${DESC} do_pre_start" "$NAME"

if [ ! -d "/var/lib/lxc/sel-node-template" ]; then

log_daemon_msg "Template not initialised" "$NAME"

log_action_begin_msg "Extracting template"

/bin/tar -xzf $SEL_LXC_TEMPLATE_ARCHIVE -C /var/lib/lxc

log_action_end_msg $?

fi

for (( i=1; i<=$SEL_LXC_NUMBER_CLONES; i++)) ; do

if [ -d /var/lib/lxc/$(hostname)-$i ]; then

log_daemon_msg "LXC Selenium container $(hostname)-${i} still loiters around from prior run" "$NAME"

log_action_begin_msg "Cleaning up"

rm -rf /var/lib/lxc/$(hostname)-$i

log_action_end_msg $?

fi

log_action_begin_msg "Cloning LXC Selenium container $(hostname)-${i}"

/usr/bin/lxc-clone -o sel-node-template -n "$(hostname)-${i}" >/dev/null

log_action_end_msg $?

done

}

do_start()

{

# Return

# 0 if daemon has been started

# 1 if daemon was already running

# 2 if daemon could not be started

/usr/bin/lxc-info -n "$(hostname)-${1}" 2>/dev/null | /bin/grep "RUNNING" &>/dev/null && return 1

log_action_begin_msg "Starting LXC Selenium container $(hostname)-${1}"

/usr/bin/lxc-start -n "$(hostname)-${1}" -d || return 2

log_action_end_msg $?

}

do_start_daemons()

{

do_pre_start

OVERALL_RESULT=0

for((i=1; i<=$SEL_LXC_NUMBER_CLONES; i++)) ; do

do_start $i

RESULT=$?

[ $RESULT -gt $OVERALL_RESULT ] && OVERALL_RESULT=$RESULT

[ $RESULT -eq 2 ] && log_failure_msg "Starting $DESC (${NAME} $(hostname)-${i}) failed"

[ $RESULT -eq 1 ] && log_warning_msg "$DESC (${NAME} $(hostname)-${i}) already running ?"

done

return $OVERALL_RESULT

}

do_stop()

{

# Return

# 0 if daemon has been stopped

# 1 if daemon was already stopped

# 2 if daemon could not be stopped

/usr/bin/lxc-info -n "$(hostname)-${1}" 2>/dev/null | /bin/grep "RUNNING" &>/dev/null || return 1

log_action_begin_msg "Stopping LXC Selenium container $(hostname)-${1}"

/usr/bin/lxc-stop -n "$(hostname)-${1}" || return 2

log_action_end_msg $?

}

do_post_stop()

{

for((i=1; i<=$SEL_LXC_NUMBER_CLONES; i++)) ; do

log_action_begin_msg "Destroying LXC Selenium container $(hostname)-${i}"

/usr/bin/lxc-destroy -n "$(hostname)-${i}"

log_action_end_msg $?

done

log_action_begin_msg "Destroying LXC Selenium container ${SEL_LXC_TEMPLATE_CONTAINER}"

/usr/bin/lxc-destroy -n $SEL_LXC_TEMPLATE_CONTAINER

log_action_end_msg $?

}

do_stop_daemons()

{

OVERALL_RESULT=0

for((i=1; i<=$SEL_LXC_NUMBER_CLONES; i++)) ; do

do_stop $i

RESULT=$?

[ $RESULT -gt $OVERALL_RESULT ] && OVERALL_RESULT=$RESULT

[ $RESULT -eq 2 ] && log_failure_msg "Stopping $DESC (${NAME} $(hostname)-${i}) failed"

[ $RESULT -eq 1 ] && log_warning_msg "$DESC (${NAME} $(hostname)-${i}) already stopped ?"

done

if [ $OVERALL_RESULT -eq 0 ] ; then

do_post_stop

fi

return $OVERALL_RESULT

}

get_status()

{

/usr/bin/lxc-info -n "$(hostname)-${1}" 2>/dev/null | /bin/grep "RUNNING" &>/dev/null

STATUS="$?"

if [ "$STATUS" = 0 ]; then

log_success_msg "$DESC (${NAME} $(hostname)-${1}) is running"

return 0

else

log_failure_msg "$DESC (${NAME} $(hostname)-${1}) is not running"

return $STATUS

fi

}

get_daemon_statuses()

{

OVERALL_RESULT=0

for (( i=1; i<=$SEL_LXC_NUMBER_CLONES; i++)) ; do

get_status $i

RESULT=$?

[ $RESULT -gt $OVERALL_RESULT ] && OVERALL_RESULT=$RESULT

done

return $OVERALL_RESULT

}

case "$1" in

start)

[ "$VERBOSE" != no ] && log_daemon_msg "Starting ${DESC} (${NAME} $(hostname)-*)" "${NAME}"

do_start_daemons

case "$?" in

0|1) [ "$VERBOSE" != no ] && log_end_msg 0 ;;

2) [ "$VERBOSE" != no ] && log_end_msg 1 ;;

esac

;;

stop)

[ "$VERBOSE" != no ] && log_daemon_msg "Stopping ${DESC} (${NAME} $(hostname)-*)" "${NAME}"

do_stop_daemons

case "$?" in

0|1) [ "$VERBOSE" != no ] && log_end_msg 0 ;;

2) [ "$VERBOSE" != no ] && log_end_msg 1 ;;

esac

;;

status)

get_daemon_statuses && exit 0 || exit $?

##status_of_proc "$DAEMON" "$NAME" && exit 0 || exit $?

;;

restart|force-reload)

#

# If the "reload" option is implemented then remove the

# 'force-reload' alias

#

log_daemon_msg "Restarting ${DESC} (${NAME} $(hostname)-*)" "${NAME}"

do_stop_daemons

case "$?" in

0|1)

do_start_daemons

case "$?" in

0) log_end_msg 0 ;;

1) log_end_msg 1 ;; # Old process is still running

*) log_end_msg 1 ;; # Failed to start

esac

;;

*)

# Failed to stop

log_end_msg 1

;;

esac

;;

*)

echo "Usage: ${SCRIPTNAME} {start|stop|status|restart|force-reload}" >&2

exit 3

;;

esac

:

Make the new init script executable:

$ sudo chmod 750 /etc/init.d/selenium-containers

Add the script to the default rc run levels:

$ sudo update-rc.d selenium-containers defaults
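The init script decides whether a clone is running by grepping `lxc-info` output for RUNNING. For a quick overview of every clone, LXC 1.0’s `lxc-info -n NAME -s` prints just the state line (for example `State:          RUNNING`). A hypothetical sketch that parses that line, kept separate from the `lxc-info` call so the parser can be tested without containers; the output format is an assumption based on LXC 1.0:

```shell
#!/bin/bash
# Hypothetical helper: read `lxc-info -s` style output on stdin and print the
# state word (e.g. RUNNING or STOPPED). Assumes a "State: <word>" line.
lxc_state_from_info() {
    awk -F: '$1 == "State" { gsub(/[[:space:]]/, "", $2); print $2; found = 1 } END { exit !found }'
}

# Hypothetical helper: show the state of each clone <hostname>-1..COUNT,
# mirroring the naming used by the selenium-containers init script.
# Needs root and LXC.
show_clone_states() {
    local count="${1:-2}" i state
    for (( i = 1; i <= count; i++ )); do
        state=$(lxc-info -n "$(hostname)-${i}" -s 2>/dev/null | lxc_state_from_info) || state=UNKNOWN
        echo "$(hostname)-${i}: ${state}"
    done
}
```

Pass the same count as SEL_LXC_NUMBER_CLONES, e.g. `show_clone_states 2`.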

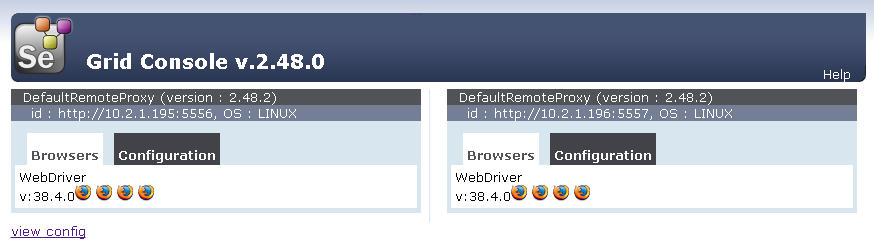

And that’s it. Restart your Selenium grid server, visit http://sghost0:4444/grid/console and you should see your grid up and running, something like this:

Further Considerations

TmpFS

I had intended to clone the containers to tmpfs (RAM disk) backed storage in order to reduce the time it takes to start the Firefox browser at the start of a test fixture. I couldn’t get this working on Ubuntu Server 14.04.3 with LXC 1.0.8, but it did work with Ubuntu Server 15.10 and LXC 1.1.4.

Snapshot based LXC Clones

A further avenue for exploration, if you continue to rely on disk-backed LXC containers, is to use snapshot clones instead of copy clones. This means switching the backing store to aufs, btrfs or LVM.
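As a starting point, LXC 1.0’s `lxc-clone` accepts `-s` (snapshot) and `-B` (backing store) options. A hedged sketch of how the clone step in the selenium-containers init script could be parameterised; it assumes the template sits on storage that supports the chosen snapshot type, which has not been verified for this setup, and the function name and the LXC_CLONE override are hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: clone TEMPLATE to NAME, optionally as a snapshot on
# BACKINGSTORE (e.g. btrfs, lvm, aufs or overlayfs).
# LXC_CLONE defaults to lxc-clone; setting LXC_CLONE=echo gives a dry run.
clone_node() {
    local template="$1" name="$2" backingstore="$3"
    local -a args=(-o "$template" -n "$name")
    if [ -n "$backingstore" ]; then
        args+=(-s -B "$backingstore")
    fi
    "${LXC_CLONE:-lxc-clone}" "${args[@]}"
}
```

For example, `LXC_CLONE=echo clone_node sel-node-template sghost0-1 overlayfs` prints the arguments that `sudo clone_node sel-node-template sghost0-1 overlayfs` would pass to lxc-clone, without creating anything.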