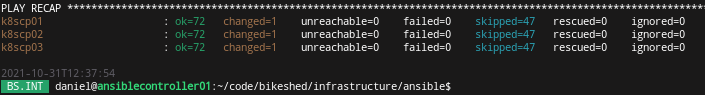

Creating a Kubernetes Cluster Using Keepalived and Haproxy With Ansible

Background

In the never-ceasing pursuit of knowledge acquisition, I built a Kubernetes cluster.

This is something I’m evaluating in my homelab, and with much knowledge acquired by trial and error, I thought it sensible to have Ansible roles written so I could freely blow away and re-create the cluster.

Disclaimer

I am new to Kubernetes. This article is a reflection on what I’ve learned. It is not intended to be an authoritative or archetypal guide.

By all means use the information provided, but please take the time to verify and test your deployment before using it for any critical workloads.

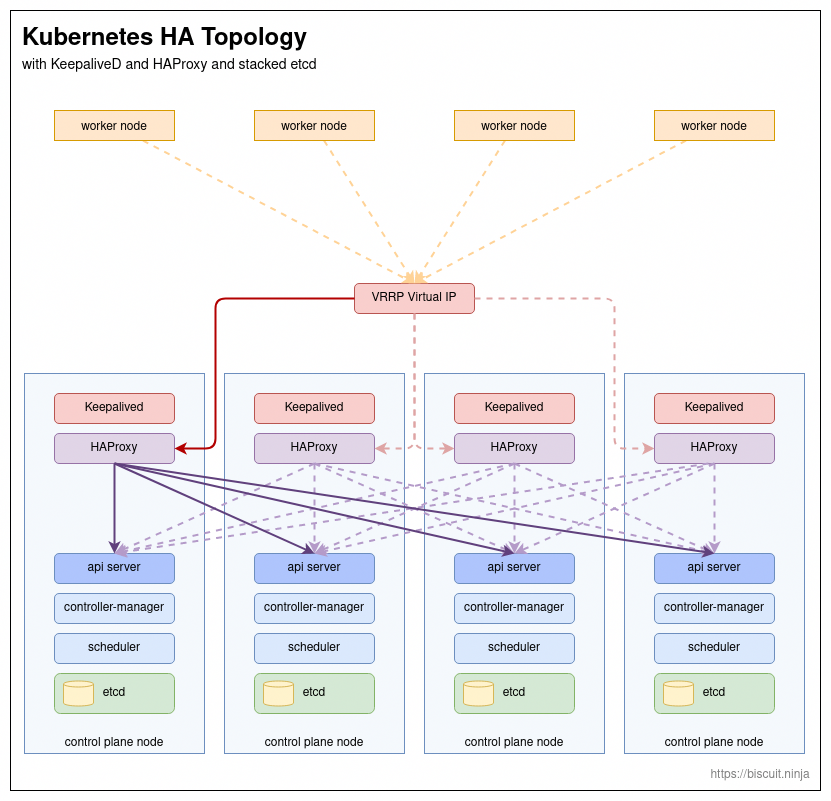

High Availability

Resilience, redundancy and high availability are all very important to me, professionally. Learning to implement Kubernetes with high availability and redundancy is an important aspect of this exercise.

Hence using Keepalived and HAProxy to provide a virtual IP and load balancing to the control plane in this Kubernetes deployment.

Kube-Vip can be used instead of Keepalived and HAProxy to provide both a virtual IP and load balancing.

I was concerned that using Kube-Vip makes the availability of a Kubernetes cluster too self-dependent and elected to make that provision independently.

I have opted for a stacked etcd deployment, where each control plane node also runs an etcd member. The alternative is an external etcd cluster running on its own hosts. etcd serves as a key-value store for Kubernetes cluster information.

Example Ansible Playbook

An example Ansible playbook is hosted over on GitLab.

There are no variables to set or change. The Ansible hosts file can be tailored to reflect the number of hosts you are provisioning.

Prerequisites

This example playbook, as with all my Ansible examples, has been written and tested using Debian.

I have run this playbook using and against hosts running both Buster and Bullseye.

Hostnames and DNS

The roles in the example playbook do rely on a few things:

- Hostnames for control plane nodes are prefixed k8scp and suffixed with a number: 01, 02, 03 and so on. So you may use k8scp01, k8scp02 and k8scp03 etc. as your control plane nodes.

- Hostnames for the worker nodes are prefixed k8s and again suffixed with a number. So you may use k8s01, k8s02 etc. for your Kubernetes worker nodes.

- The control plane nodes need to be resolvable by DNS, using their hostname. Essentially, dig k8scp01.local.net should return a valid IP address, where, for example, local.net is your local network domain.

- A DNS A record for k8scp needs to be resolvable. This record will point to the virtual IP managed by Keepalived.
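Before running the playbook, it can be worth checking that these names really do resolve. The following is a minimal sketch of such a check, not part of the example playbook. The function name `unresolved_names` is made up for illustration, and it uses the system resolver from Python's standard library rather than dig.

```python
import socket
from typing import Callable, Iterable, List


def unresolved_names(
    hostnames: Iterable[str],
    domain: str,
    lookup: Callable[[str], str] = socket.gethostbyname,
) -> List[str]:
    """Return the fully qualified names that do not resolve to an address.

    The lookup function is injectable so the check can be exercised
    without touching real DNS.
    """
    missing = []
    for host in hostnames:
        fqdn = f"{host}.{domain}"
        try:
            lookup(fqdn)
        except OSError:  # socket.gaierror is a subclass of OSError
            missing.append(fqdn)
    return missing
```

Calling `unresolved_names(["k8scp01", "k8scp02", "k8scp03", "k8scp"], "local.net")` returns the names that still need a DNS record. The hostnames and domain here are the example values used above, so substitute your own.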

Python Modules and Packages

To use this example playbook, the dnspython Python module and the dig utility are required on the host from which Ansible is pushed. To satisfy these dependencies on Debian, for example, run:

sudo apt install -y python3-dnspython bind9-dnsutils

Ansible Roles

To build this cluster, I have created two roles:

- k8sControlPlane to build the Kubernetes control plane nodes

- K8sNode to build the worker nodes

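There should be at least 3 control plane nodes for maintaining quorum and tolerating failure. A cluster with 3 control plane nodes can tolerate 1 control plane node failure, and it takes 5 to tolerate 2. A fourth node adds no extra tolerance, because quorum then rises to 3.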
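The arithmetic behind the node count can be sketched as follows. This assumes etcd's majority rule, which applies here because a stacked deployment runs one etcd member on each control plane node. The function names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with the given member count."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """Members that can be lost while a majority is still available."""
    return members - quorum(members)


# Print member count, quorum size and tolerated failures for 1 to 5 nodes.
for n in range(1, 6):
    print(n, quorum(n), failures_tolerated(n))
```

The loop shows that 3 members tolerate 1 failure, 4 still tolerate only 1, and 5 tolerate 2, which is why odd control plane counts are the usual choice.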

It is possible to remove the master taint from the control plane nodes and use them for hosting pods. This command will remove the master taint from all the nodes which have it:

kubectl taint nodes --all node-role.kubernetes.io/master-

If you choose to remove the master taint from your control plane nodes, making them in effect double up as worker nodes, then you can dispense with the worker nodes and ignore the k8sNode role. Please be advised that this is not recommended in a production or critical environment [1].

k8sControlPlane

There are 9 sets of tasks included in this role:

- setAdditionalFacts.yml determines and sets facts required by later tasks, including the control_plane_ip.

- packages.yml installs prerequisite packages for the role on the Kubernetes hosts.

- installAndConfigureKeepalived.yml installs Keepalived and creates the VRRP virtual IP for the control plane (control_plane_ip).

- installAndConfigureDocker.yml installs Docker, which is used as the Kubernetes OCI [2] container engine.

- installAndConfigureKubernetes.yml configures the host for running Kubernetes and then installs the Kubernetes packages.

- initialiseFirstControlPlaneServer.yml creates the cluster with the initial control plane node, always the one which is suffixed 01.

- installAndConfigureCalico.yml installs the Calico Container Network Interface (CNI) plugin on the cluster.

- joinAdditionalControlPlaneServer.yml joins additional control plane servers (02, 03 etc.) to the Kubernetes cluster.

The tasks in initialiseFirstControlPlaneServer.yml need to be run before any additional control plane nodes can be joined to the cluster. Therefore it is important that the first control plane node, for example k8scp01, is included in the first run of the Ansible playbook.
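The ordering constraint can be illustrated with a short sketch. It assumes the k8scp hostname convention described earlier and is not taken from the playbook itself. The function name `split_control_plane` is hypothetical.

```python
import re
from typing import List, Tuple

# Control plane hostnames: the k8scp prefix followed by a number.
CONTROL_PLANE = re.compile(r"^k8scp(\d+)$")


def split_control_plane(hosts: List[str]) -> Tuple[str, List[str]]:
    """Return (initial node, nodes to join) from a list of short hostnames.

    Hosts that do not match the control plane convention are ignored.
    """
    numbered = []
    for host in hosts:
        match = CONTROL_PLANE.match(host)
        if match:
            numbered.append((int(match.group(1)), host))
    numbered.sort()
    if not numbered or numbered[0][0] != 1:
        raise ValueError("the control plane node suffixed 01 must be in the first run")
    first = numbered[0][1]
    return first, [host for _, host in numbered[1:]]
```

Given `["k8s01", "k8scp02", "k8scp01", "k8scp03"]`, the function picks k8scp01 as the node that initialises the cluster and returns k8scp02 and k8scp03 as the nodes to join afterwards. It raises an error if the 01 node is absent.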

Kubernetes needs a network plugin [3] in order to provide network interoperability to services/containers. These come in two flavours: CNI (Container Network Interface) plugins [4], which adhere to a specification, and the more basic Kubenet plugins.

I have opted to use the Calico CNI plugin as it looks to be one of the most comprehensive and flexible network plugins currently available.

Both the initialiseFirstControlPlaneServer.yml and joinAdditionalControlPlaneServer.yml task sets include tasks for setting up the .kube folder, for both the root user and the ansible_ssh_user, so that they can administer the cluster with kubectl.
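For readers unfamiliar with what setting up the .kube folder involves, here is a rough sketch in Python. The roles do this with Ansible tasks, so this is an illustration only. It assumes the usual kubeadm layout, where the admin kubeconfig is written to /etc/kubernetes/admin.conf and kubectl reads ~/.kube/config by default. The function name `install_kubeconfig` is made up.

```python
import shutil
from pathlib import Path


def install_kubeconfig(admin_conf: Path, home: Path) -> Path:
    """Copy a kubeadm admin kubeconfig to <home>/.kube/config."""
    kube_dir = home / ".kube"
    kube_dir.mkdir(mode=0o700, exist_ok=True)
    target = kube_dir / "config"
    shutil.copyfile(admin_conf, target)
    target.chmod(0o600)  # the file holds cluster-admin credentials
    return target
```

On a control plane node this would be called as `install_kubeconfig(Path("/etc/kubernetes/admin.conf"), Path.home())`. For a non-root user, the copied file and folder also need to be owned by that user, which this sketch leaves out.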

K8sNode

This role is almost a subset of the tasks in the k8sControlPlane role, which makes it simpler.

There are just 5 sets of tasks included in this role:

- setAdditionalFacts.yml

- packages.yml

- installAndConfigureDocker.yml

- installAndConfigureKubernetes.yml

- joinKubernetesCluster.yml joins the node to the Kubernetes cluster

The tasks on which I’ve not provided additional comments above do the same job in both this role and the k8sControlPlane role.

joinKubernetesCluster.yml is very similar to joinAdditionalControlPlaneServer.yml in the k8sControlPlane role. However, it omits the uploading of PKI material necessary for creating a control plane node, the --control-plane flag on the kubeadm join string, and the creation of a .kube folder, as no Kubernetes administration can be conducted from a regular worker node.
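The difference between the two join strings can be sketched like this. It is a hedged illustration rather than the command the roles actually run. The function name is hypothetical and the endpoint, token and hash in the usage note are placeholders. Only standard kubeadm join flags are used.

```python
from typing import List


def kubeadm_join_command(
    endpoint: str,
    token: str,
    ca_cert_hash: str,
    control_plane: bool = False,
) -> List[str]:
    """Build a kubeadm join argument list for a worker or control plane node."""
    command = [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]
    if control_plane:
        # Only control plane nodes get this flag; the PKI material must
        # already be in place on the joining host for it to succeed.
        command.append("--control-plane")
    return command
```

For example, `kubeadm_join_command("k8scp:6443", "<token>", "sha256:<hash>")` gives the worker form, and passing `control_plane=True` appends the one extra flag. The port 6443 is the API server default and is an assumption here, as the load balancer in front of the control plane may listen elsewhere.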

I experimented both with combining the two roles and with using some common tasks. In the end I settled on two discrete roles, concluding that duplication was less evil than complexity.

If you prefer, you can move packages.yml, installAndConfigureDocker.yml and installAndConfigureKubernetes.yml into a common location and include them in both roles from that location.

Some of the facts set in setAdditionalFacts.yml are common to both roles, so you may want to refactor that too.

Conclusion

It is hard to draw any conclusions so early in the discovery phase of any new (to me) technology.

Kubernetes shows a lot of promise, and for many organisations is proven, as a technology for managing containerised services.

At some point, I look to containerise more of the services running on my own home network, reducing the burden of maintaining so many discrete virtual and physical hosts. However, providing a resilient and secure Kubernetes infrastructure does require, in itself, running a considerable number of nodes.

Perhaps some compromises have to be made for home use, for example running a single control plane node, or removing the master taint from a set of control plane nodes and using them all to run pods. Perhaps lightweight alternatives like k3s make more sense?

In the near future, I intend to investigate re-creating this example playbook in Terraform, look into providing storage for Kubernetes containers and work out how to effectively monitor Kubernetes and Kubernetes hosted services.

References

- kubernetes.io - Docs - Kubernetes Components

- kubernetes.io - Docs - HA Topology

- kubernetes.io - Docs - Creating a cluster with kubeadm

- kubernetes.io - Docs - Taints and Tolerations

- projectcalico.org - Docs - Kubernetes - Getting Started

- [2] Open Container Initiative. See [opencontainers.org](https://opencontainers.org/).

- [3] See this for more information on Kubernetes Network Plugins.

- [4] Container Network Interface specification. See [this](https://github.com/containernetworking/cni) project on GitHub for more information.